# LogBus2 User Guide

I. Introduction to LogBus2

LogBus2 is a log synchronization tool redeveloped on the basis of the original LogBus. Compared with the original LogBus, its memory footprint is reduced to 1/5 of the original, but its speed is increased by 5 times.

Logbus2 is mainly used to import back-end log data to the TA background in real time. Its core working principle is similar to that of Flume and Loggie. It will monitor the file flow in the log directory of the server. When any log file in the directory has new data, it will verify new data and send it to the TA background in real time.

We recommend the following types of users access data by using LogBus2:

- Users who use server SDK / Kafka / SLS to store data in TA format and upload data through LogBus2

- Users who have high requirements for accuracy and dimension of the data, whose data requirements cannot be met only depending on the client SDK, or for whom it is inconvenient to access the client SDK

- Users who don't want to develop the back-end data push process by themselves

- Users who need to transmit bulk historical data

- Users who have certain requirements for memory usage and transmission efficiency

# II. Download LogBus2

Latest version: 2.1.1.4

Update time: 2024-5-15

Linux-amd64 download (opens new window)

Linux-arm64 download (opens new window)

Windows version download (opens new window)

Mac Apple Silicon download (opens new window)

Mac Intel download (opens new window)

Docker Image (opens new window)

#

# III. Preparation before use

# File type

- Determine the directory where the uploaded data files are stored, and configure LogBus2. In this case, LogBus2 will monitor the file changes in the fc.

- Do not directly rename the uploaded data logs stored in the monitoring directory. Renaming the log is equivalent to creating a new file, and LogBus2 may upload these files again, resulting in data duplication.

- Since there is a snapshot of the current log transmission progress in the LogBus2 running directory, please do not work with the files in the runtime directory by yourself

# Kafka

- Determine the Kafka message format. In this case, Logbus will only process the value part of the Kafka Message

- Ensure that partitions are split based on userIDs to avoid out-of-order data

- Please enable the free use of Kafka Consumer Group to avoid the failure of multiple Logbus consumers

- Consume from the earliest by default. To consume from a specified location, you need to create a consumer group with a specific offset first.

# SLS

- Contact Alibaba Cloud to enable Kafka protocol support

# IV. Installation and update of LogBus2

# Installation

Download and decompress the LogBus2 installation package.

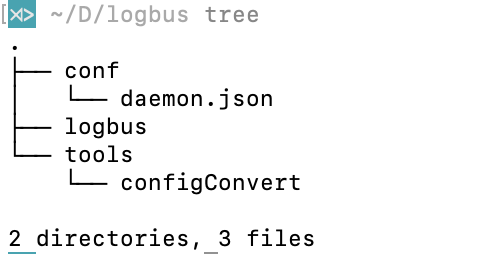

Decompressed directory structure:

- Logbus:LogBus2: binary file

- conf:

- daemon.json: configuration file template 2

- tools:

- configConvert: configuration conversion tool

# Update

Requirement: LogBus2 version ≥ 2.0.1.

Direct execution

./logbus update, execute after update.

./logbus start

# V. Use and configuration of Logbus2

# Start parameters

# Start

./logbus start

Stop

./logbus stop

# Restart

./logbus restart

# Check configuration and connectivity to TA system

./logbus env

Reset LogBus read records

./logbus reset

# Kafka is currently unavailable

View transmission progress

./logbus progress

# Kafka is currently unavailable

# Verify file format

./logbus dev

# Kafka is currently unavailable

# Configuration file guide

# Default configuration template

{

"datasource": [

{

"file_patterns": [

"/data/log1/*.txt",

"/data/log2/*.log"

],//file matching character

"app_id": "app_id",//app_id from the token of ta's official website, please get the APPID of the implementation project on the project configuration page of TA background and fill it here

},

],

"push_url": "http://RECEIVER_URL"//http transmission, please use http://receiver.ta.thinkingdata.cn/, if you enable privatization deployment, please modify the transmission URL to http://data acquisition address/

}

# Common configuration

# File

{

"datasource": [

{

"type":"file",

"file_patterns": ["/data/log1/*.txt", "/data/log2/*.log"], //file Glob matching rules

"app_id": "app_id", //APPID from the token of ta's official website, please get the APPID of the implementation project on the project configuration page of TA background and fill it here

"unit_remove": "day"| "hour", //file deletion unit

"offset_remove": 7,//unit_remove*offset_remove get the final removal time**offset must be greater than 0, otherwise it is invalid

"remove_dirs": true|false,//enable folder deletion or not NOTE: Only delete the folder after all files in the folder have been consumed

"http_compress": "gzip"|"none",//enable http compression or not,

}

],

"cpu_limit": 4, //limit the number of CPU cores used by Logbus2

"push_url": "http://RECEIVER_URL"

}

# Kafka

{

"datasource": [

{

"type":"kafka", //type: Kafka

"topic":"ta", //specific topic

"brokers":[

"localhost:9091" //Kafka Brokers address

],

"consumer_group":"logbus", //consumer group name

"cloud_provider":"ali"|"tencent"|"huawei", //cloud provider name

"username":"", //Kafka username

"password":"", //Kafka password

"instance":"", //cloud provider instance name

"protocol":"none"|"plain"|"scramsha256"|"scramsha512", //authentication protocol

"block_partitions_revoked":true,

"app_id":"YOUR_APP_ID"

}

],

"cpu_limit": 4, //limit the number of CPU cores used by Logbus2

"push_url": "http://RECEIVER_URL"

}

# SLS

NOTE: Please contact Alibaba Cloud to enable the SLS Kafka consumption protocol before SLS consumption

{

"datasource": [

{

"type":"kafka",

"brokers":["{PROJECT}.{ENTRYPOINT}:{PORT}"], //NOTE: see [https://www.alibabacloud.com/help/en/log-service/latest/endpoints#reference-wgx-pwq-zdb](https://www.alibabacloud.com/help/en/log-service/latest/endpoints#reference-wgx-pwq-zdb) for details

"topic":"{SLS_Logstore_NAME}", //Logstore name

"protocol":"plain",

"consumer_group":"{YOUR_CONSUMER_GROUP}", // ConsumerGroup

"username":"{PROJECT}", // Project name

"disable_tls":true,

"password":"{ACCESS_ID}#{ACCESS_PASSWORD}", // authorization by Alibaba Cloud RAM

"app_id":"YOUR_APP_ID"

}

],

"push_url": "http://RECEIVER_URL"

}

# Complete configuration item

# Configuration item list and description

| Configuration | Type | Example | Required field | Description |

|---|---|---|---|---|

| cpu_limit | Number | 4 | Limit the maximum allowable number of CPU cores used by Logbus2 | |

| push_url | String | ✔️ | Receiver address, starting with http/https. | |

| datasource | Object list | ✔️ | Data source list |

# datasource (data source configuration)

# File

| Configuration | Type | Example | Required field | Default value | Description |

|---|---|---|---|---|---|

| app_id | String | ✔️ | "" | Data reporting project appid | |

| appid_in_data | Bool | false | false | When set to true, logbus will use appid from data instead rather than the configure value. | |

| specified_push_url | Bool | false | True: do not parse push_url, and send it as the push_url configured by the user, that is, http://yourhost:yourport. False: After parsing the push_url, send it according to the logbus url specified by the receiver, namely http://yourhost:yourport/logbus. | ||

| add_uuid | Bool | false | True: Add the uuid property in each piece of data or not (the transmission efficiency will reduce if enabled). | ||

| file_patterns | String list | ✔️ | [""] | Directory wildcards are supported, but regular expressions are not supported at this time. If without special configuration, it is bypassed by default. Files suffixed with gz/.iso/.rpm/.zip/.bz/.rar/.bz2 | |

| ignore_files | String list | [""] | Files filtered in file_patterns | ||

| unit_remove | String | "" | Delete user files. Delete by day or hour. Note: If no configuration file is automatically deleted, the memory footprint of LogBus2 will gradually increase | ||

| offset_remove | Int | 0 | Delete user files. When offset_remove>0 and unit_remove is configured by day or hour, the user file deletion function can be enabled. | ||

| remove_dirs | Bool | true|false | false | Delete the folder or not | |

| http_timeout | String | 500ms | 600s | Timeout when sending data to receiver, default value: 600s. Range: 200ms - 600s. Support milliseconds "ms", seconds "s", minutes "m", hours "h". | |

| iops | int | 20000 | 20000 | Limit Logbus data traffic per second (number of items) | |

| limit | bool | true|false | false | Turn on the speed limit switch | |

| http_compress | String | none | gzip | none | Format of data compression when sending http. none=no compression. Default value: none. |

NOTE: The max_batch_size is verified first, followed by batch length verification. If the last interval is 2 seconds, it is forced to send.

# Kafka

NOTE: Before enabling the Logbus Kafka mode, be sure to enable the free use of the Consumer Group

Configuration | Type | Example | Required field | Default value | Description |

|---|---|---|---|---|---|

| brokers | String List | ["localhost:9092"] | ✔️ | [""] | Kafka Brokers |

| topic | String | "ta-msg-chan" | ✔️ | "" | Kafka topic |

| consumer_group | String | "ta-consumer" | ✔️ | "" | Kafka Consumer Group |

| protocol | String | "plain" | "none" | Kafka authentication mode | |

| username | String | "ta-user" | "" | Kafka username | |

| password | String | "ta-password" | "" | Kafka password | |

| instance | String | "" | "" | CKafka instance ID | |

| fetch_count | Number | 1000 | 10000 | Number of messages per Poll | |

| fetch_time_out | Number | 30 | 5 | Poll timeout | |

| read_committed | Bool | true | false | Consume Kafka UnCommitted data or not | |

| disable_tls | Bool | true | false | Disable tls verification | |

| cloud_provider | String | "tencent" | "" | Enabled when the public network is connected to Kafka. Currently, the following cloud providers provide this service: tencent, huawei, ali | |

| block_partitions_revoked | Bool | false | false | Block consumption or not. If disabled, data duplication will occur when multiple Logbuses are in the same consumer_group | |

| auto_reset_offset | String | "earliest" | "earliest" | A parameter that specifies the default behavior when there are no committed offsets |

NOTE: Logbusv2 currently consumes Kafka in load balance mode, and the number of Logbusv2 deployments is ≤ partition num

# Monitoring configuration and dashboard construction

Please view: Monitoring Configuration DEMO (opens new window)

# Alert configuration

Please view: Alert Configuration DEMO (opens new window)

# Plugin use

Please view: (opens new window)Plugin Configuration DEMO (opens new window)

# VI. Advanced use

# Report multiple events through a single Logbus

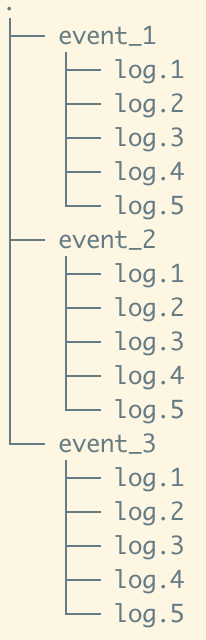

In the case of single Logbus deployment, due to IO limitations, there may be situations where some information is consumed with a delay, for example

Due to polling, the consumption order is event_/log.1 -> event_/log.2 -> event_*/log.3. In this case, file consumption will slow down. You can enable multiple LogBuses to cut logs without contextual meaning through GloBs so that the files matched by GloBs can be uploaded in parallel

# Multiple PipeLine configuration

NOTE: The appid cannot be repeated under multiple PipeLine

{

"datasource": [

{

"file_patterns": ["/data/log1/*.txt", "/data/log2/*.log"], //file Glob matching rules

"app_id": "app_id", //APPID from the token of ta's official website, please get the APPID of the implementation project on the project configuration page of TA background and fill it here

"unit_remove": "day"| "hour", //file deletion unit

"offset_remove": 7,//unit_remove*offset_remove get the final removal time**offset must be greater than 0, otherwise it is invalid

"remove_dirs": true|false,//enable folder deletion or not NOTE: Only delete the folder after all files in the folder have been consumed

"http_compress": "gzip"|"none",//enable http compression or not

},

{

"file_patterns": ["/data/log1/*.txt", "/data/log2/*.log"], //file Glob matching rules

"app_id": "app_id", //APPID from the token of ta's official website, please get the APPID of the implementation project on the project configuration page of TA background and fill it here

"unit_remove": "day"| "hour", //file deletion unit

"offset_remove": 7,//unit_remove*offset_remove get the final removal time**offset must be greater than 0, otherwise it is invalid

"remove_dirs": true|false,//enable folder deletion or not NOTE: Only delete the folder after all files in the folder have been consumed

"http_compress": "gzip"|"none",//enable http compression or not

}

],

"cpu_limit": 4, //limit the number of CPU cores used by Logbus2

"push_url": "http://RECEIVER_URL"

}

# LogBus2 On Docker

# Pull the latest mirror

docker pull thinkingdata/ta-logbus-v2:latest

# Create a persistent folder on the host and initialize the configuration file

mkdir -p /your/folder/path/{conf,log,runtime}

touch /your/folder/path/daemon.json

vim /your/folder/path/daemon.json

⚠️ Warning: Do not delete any file in the runtime directory by yourself

# Modify the configuration template and write to daemon.json

{

"datasource": [

{

"type":"file",

"app_id": "YOUR APP ID",

"file_patterns": ["/test-data/*.json"],

"app_id":""

},

{

"type":"kafka",

"app_id": "YOUR APP ID",

"brokers": ["localhot:9092"],

"topic":"ta-message",

"consumer_group":"ta",

"app_id":""

}

],

"push_url": "YOUR PUSH URL WITHOUT SUFFIX OF/logbus"

}

# Mount the data folder and start LogBus

docker run -d \

--name logbus-v2 \

--restart=always \

-v /your/data/folder:/test-data/ \

-v /your/folder/path/conf/:/ta/logbus/conf/ \

-v /your/folder/path/log/:/ta/logbus/log/ \

-v /your/folder/path/runtime/:/ta/logbus/runtime/ \

thinkingdata/ta-logbus-v2:latest

# LogBus2 On K8s

# Prepare the environment

- Kubectl can connect to the k8s cluster and has deployment permissions.

- Installing dependencies: install helm to the local command line according to the helm file https://helm.sh/zh/docs/intro/install/

# Download the logbus v2 helm file

download link (opens new window)

tar xvf logBusv2-helm.tar && cd logbusv2

# Configure logbus

# Preparations

- Create the log pvc to be uploaded on the console

- Get the pvc name and confirm the namespace

- Get TA's app id, receiver url

# Modify values.yaml

pvc:

name: pvc name

logbus_version: 2.1.0.2

namespace: namcspace name

logbus_configs:

- push_url: "http://receiver address of TA upload data"

datasource:

- file_patterns:

- "container:wildcard of the relative path to the file" # Do not delete the prefix "container:"

- "container: wildcard of the relative path to the file" # Do not delete the prefix "container:"

app_id: app id of TA system

# yaml for preview rendering

helm install --dry-run -f values.yaml logbus .

# Use helm to deploy logbusv2

helm install -f values.yaml logbus-v2 .

Check the created statefulset

kubectl get statefulset

Check the created pod

kubectl get pods

Update the LogBus in K8s

vim value.yaml # Modify the previous value.yaml file

# Modify logbus_version to the latest NOTE: Considering backward compatibility, it's better not to use the latest!

logbus_version:2.0.1.8 -> logbus_version:2.1.0.2

# save and exit

helm upgrade -f values.yaml logbus .

# Wait for rolling update

# Notes

Logbusv2 has read and write permissions on the pvc of the mounted log.

Logbusv2 writes file consumption records and running logs to PVC respectively according to pod. If PVC deletes logbus-related records, there is a risk of data retransmission.

# Configuration details

Execute command:

helm show values .

Show available configurations:

# Default values for logbusv2.

# This is a YAML-formatted file.

# Declare variables to be passed into your templates.

pvc:

name: pvc-logbus

logbus_version: 2.1.0.2

namespace: big-data

logbus_configs:

#### pod 1

#### push_url: receiver url, need http:// https:// prefix

- push_url: "http://172.17.16.6:8992/"

datasource:

- file_patterns:

#### target files relative path in pvc

- "container:/ta-logbus-0/data_path/*"

#### TA app_id

app_id: "thinkingAnalyticsAppID"

#### pod 2

- push_url: "http://172.26.18.132:8992/"

datasource:

- file_patterns:

- "container:/ta-logbus-1/data_path/*"

app_id: "thinkingAnalyticsAppID"

#### pod 3

- push_url: "http://172.26.18.132:8992/"

datasource:

- file_patterns:

- "container:/ta-logbus-2/data_path/*"

app_id: "thinkingAnalyticsAppID"

#### logbus pod requests

#requests:

# cpu: 2

# memory: 1Gi

requests not clearly configured will not be reflected in yaml.

# pvc order catalog

pvc:

name: write the actual pvc name

namespace: existing namespace

logbus_configs:

- push_url: http or https, write the TA receiver URL that the pod can access

datasource:

- file_patterns:

- "container:/ta-logbus-0/data_path/*" "container:"placeholder. During yaml deployment, the relative path is replaced with an absolute path that the container can access. When configuring the directory, the directory is prefixed with container:.

app_id: "thinkingAnalyticsAppID" TA system app id

Multiple directories in pvc

When reading multiple directories in pvc, it is recommended to deploy them in pods, and each pod is responsible for a folder. This allows for better deployment performance and security.

pvc:

name: pvc-logbus

namespace: big-data

logbus_configs:

#### pod 1

#### push_url: receiver url, need http:// https:// prefix

- push_url: "http://172.17.16.6:8992/"

datasource:

- file_patterns:

#### target files relative path in pvc

- "container:/ta-logbus-0/data_path/*"

#### TA app_id

app_id: "thinkingAnalyticsAppID"

#### pod 2

- push_url: "http://172.26.18.132:8992/"note that each app id and push url need to be configured separately

datasource:

- file_patterns:

- "container:/ta-logbus-1/data_path/*"

app_id: "thinkingAnalyticsAppID"

#### pod 3

- push_url: "http://172.26.18.132:8992/"

datasource:

- file_patterns:

- "container:/ta-logbus-2/data_path/*"

app_id: "thinkingAnalyticsAppID"

# Multi-pvc

Currently, it only supports the deployment of a single pvc, and multi-pvc requires multiple configurations of values.yaml file

# VII. FAQs

Q: Why is folder deletion enabled, but LogBus does not delete the folder?

A: The prerequisite for LogBus to delete a folder is that the files in the current folder have been read by LogBus and there is no file in the folder, then folder deletion will be triggered

Q: Why can't the log be uploaded?

A: In the data files read by LogBus, there should be no line breaks in a single piece of data. The configured data file does not support regular expressions, only wildcard (Glob) is used. Can the configured data file rules be matched to files

Q: Why are files uploaded repeatedly?

A: In the data files read by LogBus, there should be no line breaks in a single piece of data. The configured data file does not support regular expressions, only wildcard (Glob) is used. Can the configured data file rules be matched to files

Q: Why data skewing

A: Currently TE uses distinct_id as data UUID for shuffle. When using the same string for the distinct_id of massive data, it may lead to the increase of memory pressure on a single machine, thus increasing the risk of data skew.

# VIII. Releases Note

# Version: 2.1.1.4 --- 2024.5.15

Optimizations:

- Kafka source now supports

fetch_max_partition_bytes - DataSource supports skipping remote validation, default off

- Utilizing SIMD for accelerated JSON processing

# Version:2.1.1.3 --- 2024.2.23

Optimizations

- Enhanced Kafka Progress experience

- Added support for the

auto_reset_offsetparameter - Logged Kafka Client related information for improved logging

Bug Fixes

- Force shutdown causing Logbus to kill the wrong process

- Memory leak issue

- Goroutine leak problem

- Kafka timeouts not being consumed

- Failure to start on Windows version

- Inability to update on Windows version

# Version:2.1.1.2 --- 2024.2.2

Improve

- i18n log format

# Version:2.1.1.1 --- 2024.1.10

Improve

- The accuracy of the

progresscommand.

# Version:2.1.1.0 --- 2023.12.11

- Fix

- The default values for logs have been adjusted to retain logs for 7 days by default, with individual files split at 100MB and a maximum retention of 30 log files. Without upgrading, the configuration can also be set using the log configuration option.

- Impact

- In the case of generating a large amount of unparseable error data in the data source, the logging of these errors can lead to the log files becoming increasingly large.

# Version:2.1.0.9 --- 2023.10.26

Add

- Support for event filters allows data filtering to be performed on the client side.

- Support for space detection within the logbus directory.

# Version:2.1.0.8 --- 2023.6.06

Improve

- Ensuring proper closure of plugins is essential.

- Optimizing process communication and log output is crucial for improving overall system performance.

Fix

- CPU limit log output

- linux arm version compile

# Version:2.1.0.7 --- 2023.4.07

Add

- Kafka Source support command

progress

Improve

- Custom tags support retrieving environment variables

# Version:2.1.0.6 --- 2023.3.28

Improve

- Support for custom tags to trace data sources

- Support for custom plugin delimiters

# Version:2.1.0.5 --- 2023.2.20

Improve

- Data streaming project supports arrays, compatible with numeric and string primitive types

- Monitoring metric calculation logic

# Version:2.1.0.4 --- 2023.1.12

Add

- Support configuration to read data from files without newline characters

- Support configuring the number of iterations and interval time for cyclic reading

Fix

- Fix concurrency bugs in monitoring metric statistics

# Version:2.1.0.3 --- 2022.12.23

Add

- Allow overriding internal appid data through configuration

Fix

- Use appid_in_data and remove the default value for appid

# Version: 2.1.0.2 --- 2022.12.13

Add

- Plugin supports property splitting

Repair

- Create meta_name under multiple pipelines

# Version: 2.1.0.1 --- 2022.11.29

Add

- The kafka data source supports transactional read committed

- Plugin command supports sh environmental dependencies

Repair

- Create meta_name without appid configuration

# Version: 2.1.0.0 --- 2022.11.22

Add:

- Data distribution: distribute data to different projects according to the configuration appidMap

- Kafka data source supports multi-topic consumption

- Limiter, to limit the reporting speed and reduce the server pressure

- Multiple pipelines perform data reporting

- Custom plugin parser based on grpc

- Real-time performance monitoring (prometheus, pushgateway, grafana)

- Add lz4 " lzo to the compaction algorithm

Repair:

- Fix the bug where logbus is unable to stop under the kafka data source

- Fix the bug that the file is too active to jump out under the file data source

- Fix the file monitor closing issue

# Version: 2.0.1.8 --- 2022.07.20

Add:

- dev (format verification command)

- Kafka Source

- Multi-platform

Repair:

- Problem with waking up file transmission several times

- Less log volume

Progresssorting by file transmission time- Multi-pipeline optimization

Docker imagestreamlining

# Version: 2.0.1.7 --- 2022.03.01

Optimization:

- Operating efficiency, higher performance

- File deletion logic

- Location file export logic

- Memory footprint